Is The Singularity Still On Schedule?

I got my cure for obesity, but I still haven't got my 150-year extended lifespan...

Technological singularity - Wikipedia

The first person to use the concept of a "singularity" in the technological context was the 20th-century Hungarian-American mathematician John von Neumann.[5] Stanislaw Ulam reports in 1958 an earlier discussion with von Neumann "centered on the accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue".[6] Subsequent authors have echoed this viewpoint.[3][7]

I became aware of the concept of the Technological Singularity some 35 years ago when I lifted Vernor Vinge’s new novel, Marooned In Realtime, from the top of my ever-growing “to read” stack of books, probably in 1987. Even then, Vinge was a glittering star in the SF firmament, a master of the Big Idea. Some science fiction writers are lapidary in their approach - think of Ray Bradbury. Vinge prefers to work at universal scale in his imaginings, as he would later demonstrate with such blockbusters as A Fire Upon the Deep, a sort of technological War and Peace, and still one of the most intellectually awe-inspiring novels I have ever read.

Oddly, since I have mostly been known as a cyberpunk writer - my first half dozen novels were the straight quill, to the point that The Most Famous Cyberpunk Writer of Them All once called me, drunk, in the middle of the night to accuse me of “stealing his bits” - I had not at that point read Vinge’s first published intimations of his concept of the Tech Singularity, in a novella called True Names, which is generally considered to be cyberpunk’s Ur Document. See what I mean about Big Ideas? Not only did he conceptually flesh out the notion of a Tech Singularity, but in his spare time he envisioned cyberspace as well.

At any rate, when I read Realtime, it was my first brush with Vinge, so I had not yet read either of its precursors, The Peace War or the novella The Ungoverned. Consequently, his musings and explications on a technological singularity came as a complete - and welcome - surprise. I was noodling with some Big Ideas myself at that point, both in my writing career (a little something I was calling the “Matrix” in my first novel, Dreams of Flesh and Sand) and in my personal life, so a Tech Singularity was perfect grist for my imaginative mill.

Stirred into the mix along with Vinge’s Tech Singularity were other intriguing items: Moore’s Law - the revised version of which states simply that, “the number of transistors in an integrated circuit (IC) doubles about every two years.” To most people at that time, the dry statement was meaningless, but to a science fiction writer steeped, at least to a certain degree, in the hard sciences, the implications were enormous. Moore revised his initial projection, made in 1965, which “posited a doubling every year in the number of components per integrated circuit,[a] and projected this rate of growth would continue for at least another decade.” Had that actually held true, we would have hit a tech singularity a couple of decades ago. His revision, however, continues to generate robust predictions:
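To make the difference between the two doubling periods concrete, here is a minimal Python sketch of the compounding. It assumes a perfectly smooth exponential (real transistor counts are lumpier), and the 1975 to 2020 window is an illustrative choice: 1975 is roughly when Moore revised his forecast, and 2020 is the year given below for the expected slowdown. The `growth_factor` helper is hypothetical, written only for this illustration.

```python
"""Compare Moore's original (one-year) and revised (two-year) doubling periods.

A rough illustration only: the smooth exponential and the start/end years
are assumptions, not historical data.
"""


def growth_factor(doubling_years: float, start_year: int, end_year: int) -> float:
    """Return how many times a quantity multiplies if it doubles every
    `doubling_years` years between `start_year` and `end_year`."""
    elapsed = end_year - start_year
    return 2 ** (elapsed / doubling_years)


if __name__ == "__main__":
    START, END = 1975, 2020  # illustrative window (assumption)
    for label, period in [("original: every year", 1.0), ("revised: every two years", 2.0)]:
        factor = growth_factor(period, START, END)
        print(f"{label:>26}: x{factor:.3g} over {END - START} years")
```

Over that 45-year window, a yearly doubling multiplies the count by 2^45, about 3.5 × 10^13, while a two-year doubling gives 2^22.5, about 5.9 × 10^6. The ratio between the two paths is itself about six million, which is the sense in which the original one-year pace would have put us far ahead of where we are.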

This chart only shows the progression through 2020, the year in which Moore himself forecast that the rate of increase would finally slow. Has it?

Not really. Or rather, the source of the increase is beginning to change.

As nanoscale transistor density is hitting molecular limits, it's becoming impossible to cool circuits. Consequently, Moore's Law will taper off, which Moore himself predicted would happen after around 2020. This, however, may not spell the end of Moore's Law. Rather, we'll see Moore's Law enter into a new dimension.

The proposed "More than Moore" (MtM) methodology will continue to fuel the ITRS's roadmapping efforts.

Meanwhile, Intel itself has developed new technology, such as 3D CPU transistors or GAAFET, which is poised to create 10x processing performance and efficiency. These advances alone could lead to new CPUs that will uphold Moore's Law well beyond 2025.

Moore’s Law has been driving technological innovation for more than half a century. It has been responsible for creating the world in which we live today - computers in everything, a giga-supercomputer in your pocket masquerading as a cellphone, and all of the advances made possible in science, medicine, pharma, industry, space technology, and on and on. But, viewed in a different way, Moore’s Law is only one mechanism, albeit an important one, functioning along one segment of the long road to the Tech Singularity.

Moore may have derived his “law” from empirical observation, but he, along with Vernor Vinge, can in a way be said to have shaped and enabled our vision of the thing. And even they were not its original conceptual creators - that honor goes to John von Neumann. I find it fascinating to consider the intellectual firepower possessed by these Tech Singularity pioneers, but the single most influential thinker on the subject has to be yet another top-tier intellect: Ray Kurzweil, who is something of a nerd deity but is almost entirely unknown to the general public.

The Singularity has been much like the elephant and the blind men - depending on who, or what, you are, you can make out only a part of it, and are ignorant of the thing as a whole. It has been called, mockingly, the “Rapture of the Nerds,” which is silly, since true nerds tend not to be very religious, and not much given to raptures. It has also been called blasphemy by some of the few religious figures who have become aware of its possible existence.

Indeed, the Singularity isn’t new. It is the latest manifestation of the recurrent blasphemy that man will one day be greater than God. This time it is just nuanced by the fact that technology will be even greater than man. After the Singularity, machines will not only win Jeopardy; they will rule the world.

If you think it is a bit much to throw around the word blasphemy, consider this summation by Grossman of Kurzweil’s ultimate aspirations for advanced computing applied to the human genome: “Indefinite life extension becomes a reality: people die only if they choose to. Death loses its sting once and for all. Kurzweil hopes to bring his dead father back to life.”

Perhaps you have joined me in a chill.

Or perhaps not. Of course, other blind men see a different part of the Singularity elephant, and reach precisely opposite conclusions. While some denounce the notion as atheistic blasphemy against their One True God, others decide that “Singularitarianism,” that is, “the belief that a technological singularity—the creation of superintelligence—will likely happen in the medium future, and that deliberate action ought to be taken to ensure that the singularity benefits humans,[1]” is simply another form of religion, with technological rather than divine origins, possessing no really rational underpinnings. Hence the Singularity itself becomes a religious “rapture” for “nerds,” (scientists and technologists). Both sides of this techno-religious coin seem to hate each other almost as much as they hate the idea of the Singularity itself. And both are equally unfond of the man they often call the “High Priest” of the Singularity, Ray Kurzweil. (Project much?) The truth is, though, that Kurzweil has functioned much more as a prophet than a priest. Priests lead worship. Prophets predict, and educate, and warn about the consequences of their predictions.

Here’s a potted biography of the man:

Raymond Kurzweil was the principal developer of the first omni-font, optical character-recognition software, the first print-to-speech reading machine for the blind, the first CCD flat-bed scanner, the first text-to-speech synthesizer, the first music synthesizer capable of recreating the sounds of a grand piano and other orchestral instruments, and the first commercially marketed large-vocabulary speech-recognition technology.

Mr. Kurzweil has founded and developed nine successful businesses in optical character recognition, music synthesis, speech recognition, reading technology, virtual reality, financial investment, cybernetic art, and areas of artificial intelligence.

He was inducted into the National Inventors Hall of Fame in 2002, was awarded the Lemelson-MIT Prize in 2001, and received the National Medal of Technology in 1999. He has also received scores of other national and international awards, including the Dickson Prize and the Grace Murray Hopper Award. He has been awarded 12 honorary doctorates and has received honors from three U.S. presidents.

Mr. Kurzweil’s books include The Age of Intelligent Machines (MIT Press, 1990), The Age of Spiritual Machines: When Computers Exceed Human Intelligence (Viking Penguin, 1999), Fantastic Voyage: Live Long Enough to Live Forever (Rodale Press, 2004), with Terry Grossman, and his latest, The Singularity Is Near: When Humans Transcend Biology (Viking Penguin, 2005).

If you were a high-functioning artificial general intelligence setting out to design a personal history for your principal prophet, it would probably look a lot like Ray Kurzweil’s backstory. Just about everything he’s been successfully involved with can be considered a precursor element of the Artificial General Intelligence-generated Technological Singularity he has labored for decades to inform us about, even as he helps to create it.

By no coincidence whatsoever, Kurzweil is:

“currently a principal researcher + AI visionary at Google — a subsidiary of the company Alphabet — where he + his team of software programmers are studying ways that computers can: process, interpret, understand, and use human language in everyday applications.”

In other words, the “Prophet of the Technological Singularity” is also the “AI Visionary” at the second largest (by capitalization) company on the planet currently engaged in the research and development of artificial intelligence, including, presumably, artificial general intelligence, the grail of the field and the keystone of the Tech Singularity as we understand it today.

Which, of course, does not necessarily mean we understand it at all, any more than we fully understand artificial general intelligence (AGI) or, for that matter, human intelligence. But if anybody should have a glimmer in these directions, you could do worse than decide that Ray Kurzweil is that guy. And to return to the original question of this essay, Ray Kurzweil thinks that the Tech Singularity is right on schedule.

In recent years, the entrepreneur and inventor Ray Kurzweil has been the biggest champion of the Singularity. Mr. Kurzweil wrote “The Age of Intelligent Machines” in 1990 and “The Singularity Is Near” in 2005, and is now writing “The Singularity Is Nearer.”

By the end of the decade, he expects computers to pass the Turing Test and be indistinguishable from humans. Fifteen years after that, he calculates, the true transcendence will come: the moment when “computation will be part of ourselves, and we will increase our intelligence a millionfold.”

These two dates - 2029 and 2045 - are the same ones he’s been publicly predicting for a while now. Here he is at SXSW in 2017. What’s significant about this is that nothing that has happened between 2017 and now has shaken Kurzweil’s predicted timetable in the slightest: not economic turmoil, not “pandemics,” not wars, not the rise of China and Russia into competitive, even threatening, status with the United States. And if you think about it a bit, he’s probably taking the correct attitude - wars, economic upheavals, plagues, and the rise and fall of empires are common occurrences, almost to the point of unremarkability, on the long march of history. Many people consider them inevitable consequences of human nature, unavoidable, almost mundane.

The Tech Singularity, by its very nature, will be singular, standing apart from history as we’ve known it, and starting it, and ourselves, anew in ways none of us can fully conceive unless and until we experience them for ourselves. It will be simultaneously utterly personal and unavoidably universal in its effects. It will, to use the corniest possible phrasing, change everything. What, for instance, does human nature mean for humans whose minds are combined and enhanced with machine intelligence? What sort of nature does an entity that thinks a million times as fast as a Human 1.0 possess? The answer is, we, who are still Human 1.0, have no idea. It’s that singularity thing again.

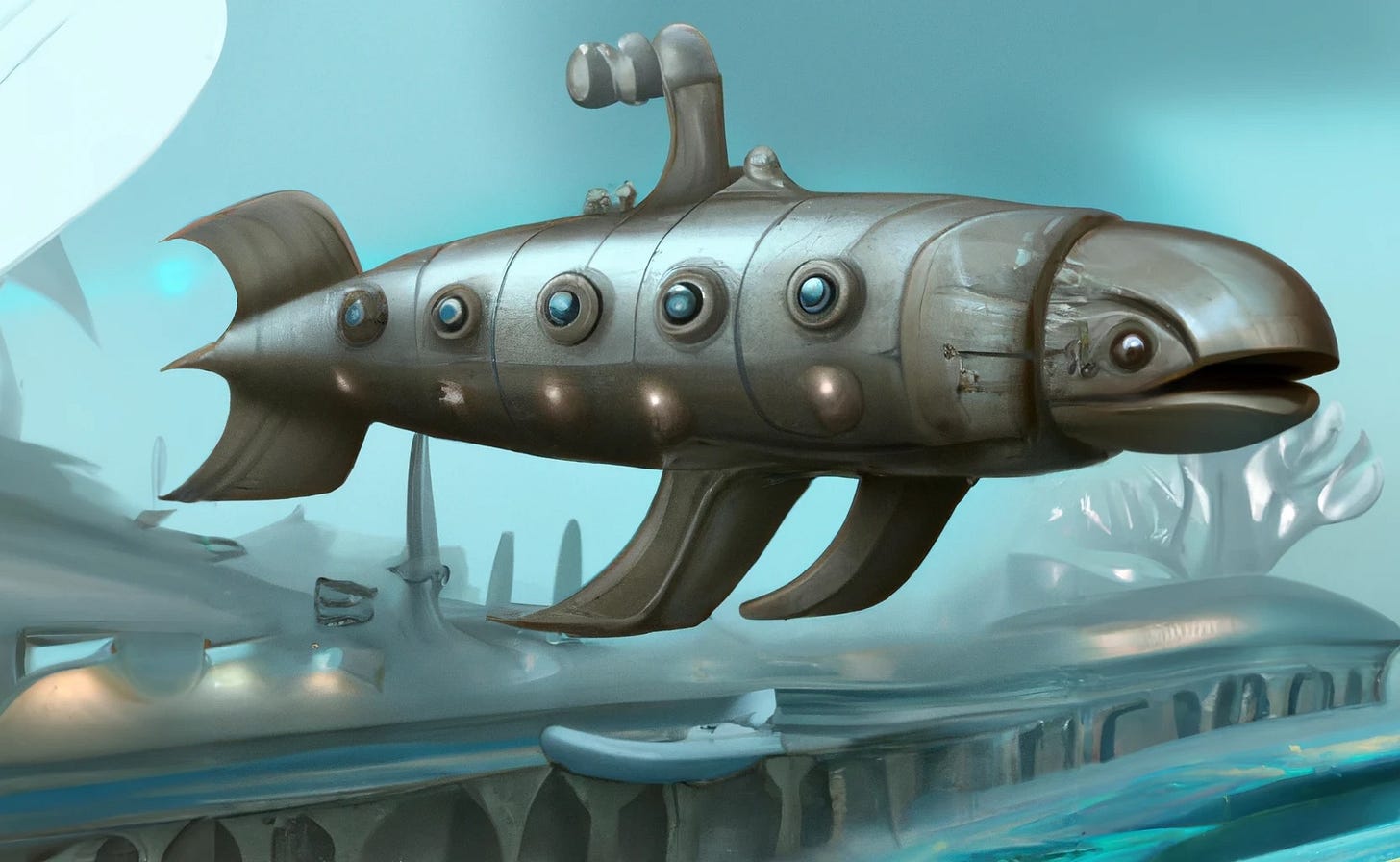

But Can It Swim?

"The question of whether a computer can think is no more interesting than the question of whether a submarine can swim."

"Computer science is no more about computers than astronomy is about telescopes."

Edsger Dijkstra

Less famously, Dijkstra also remarked, "The effort of using machines to mimic the human mind has always struck me as rather silly. I would rather use them to mimic something better," which may be an even more poignant observation than his more famous one.

Because of the Blind Men/Elephant problem, I’ve found much of the discussion about the Tech Singularity to be not especially useful, and, in fact, as a science fiction writer and futurist thinker, I’m not even sure the notion of a tech singularity isn’t something of a distraction from a larger picture. What about a religious singularity? A spiritual singularity? An alien singularity? A simulation singularity? This last one is actually a distinct possibility, and may even be an offshoot of something else’s tech singularity, or - try wrapping your mind around this one - our own tech singularity.

How would that work, by the way? Well, let’s say that our Tech Singularity arrives on schedule, we meld with our thinking machines and become like gods with unlimited computing capacity and power, and one of the things we become able to do is run simulations of entire universes and everything in them. And so we do, and one, or many, of those simulations are of humanity’s past. And one of those is what you and I are “living” in right now. And in our simulation, we are experiencing a tech singularity which may, or may not, end up resembling our original one.

I know that if I were to become one of those god-like post-singularity entities, I would certainly be interested in seeing what other options might be available on the singularity buffet, and running one, or a million, simulations of a human tech singularity might be an effective way of figuring that out. (There is a novel in there somewhere, which I may or may not bother to tease out one of these years.) Here is an entry-level discussion of the concept: Are we living in a computer simulation? I don’t know. Probably.

In case you haven’t noticed, thinking deeply about essentially unknowable things like singularities is a messy process that can make your brain ache. Endlessly recursive tail-chasing will do that sometimes. In an effort to avoid such self-inflicted discomfort, I tend to limit my musings to the runup to the singularity, rather than the thing itself, which is by definition unknowable to us at the moment since we have not yet experienced it. Moreover, by its very nature it is computationally irreducible.
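Computational irreducibility, a term popularized by Stephen Wolfram, describes a process whose outcome has no known shortcut: the only way to find out what it does is to run it, step by step. The usual toy illustration, swapped in here rather than taken from anything above, is Wolfram's Rule 30 cellular automaton. The Python sketch below assumes that standard rule (new cell = left XOR (center OR right)) and uses hypothetical helper names; note that Rule 30's irreducibility is widely believed but has not been proven.

```python
"""Rule 30 cellular automaton: a standard toy example of computational
irreducibility. As far as anyone knows, the only way to learn the center
cell at generation n is to compute every generation before it.
"""


def rule30_step(cells: list[int]) -> list[int]:
    """Advance one generation of Rule 30 on a fixed-width row.
    Cells beyond the edges are treated as 0."""
    padded = [0] + cells + [0]
    new_cells = []
    for i in range(1, len(padded) - 1):
        left, center, right = padded[i - 1], padded[i], padded[i + 1]
        # Rule 30: new = left XOR (center OR right)
        new_cells.append(left ^ (center | right))
    return new_cells


def center_column(steps: int) -> list[int]:
    """Return the center cell's value for generations 0..steps,
    starting from a single live cell."""
    width = 2 * steps + 1  # wide enough that edge effects never reach the center
    cells = [0] * width
    cells[steps] = 1
    column = [cells[steps]]
    for _ in range(steps):
        cells = rule30_step(cells)  # no skipping ahead: simulate every generation
        column.append(cells[steps])
    return column


if __name__ == "__main__":
    print("".join(str(bit) for bit in center_column(20)))
```

Nothing in `center_column` jumps ahead: to learn the center cell at generation n, it computes all n generations before it. That is the property the paragraph above leans on, scaled down to a toy.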

Better instead to try to figure out what we will likely see and experience on our way to the singularity. We can’t know if the singularity is still on schedule unless we have some idea what that schedule might be. And once again, Kurzweil displays his usefulness. He published a “Singularity Timeline” in his book The Singularity Is Near, written in 2004 and published in 2005:

When checking for accuracy on the 2019 predictions, one runs into a few issues. What, exactly, does “power” mean in the context of “total power of all computers is comparable to total brainpower of the human race?” Here’s one attempt at quantification (from a 2015 article):

According to different estimates, the human brain performs the equivalent of between 3 x 10^13 and 10^25 FLOPS. The median estimate we know of is 10^18 FLOPS.

Our best guess estimate for computing capacity of all GPUs and TPUs is 3.98 * 10^21 FLOP/s

Our lower bound estimate is 1.41 * 10^21 FLOP/s

Our upper bound estimate is 7.77 * 10^21 FLOP/s

This metric gives a single human brain a median estimate of one exaflop (10^18 FLOPS) of power.

It gives total computing power as approximately 4 zettaflops.

A zettaflop is 1,000 exaflops, so if I am understanding the notation correctly*, global computing power equals that of roughly 4,000 human minds today, a large shortfall from the approximately 8 billion human minds currently in existence. However, global computing power is growing faster than human computing power, and the rush to AI is throwing digital gasoline on its exponential growth.
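Since the exponents are where these comparisons usually go wrong, here is the same back-of-the-envelope arithmetic as a minimal Python sketch. The constants are simply the estimates quoted above (median brain at 10^18 FLOPS, best-guess global GPU and TPU capacity at 3.98 * 10^21 FLOP/s, about 8 billion people); they are not measurements, and `brain_equivalents` is a hypothetical helper.

```python
"""Back-of-the-envelope check of the brain-vs-global-compute figures.
All constants are the quoted estimates, not measurements.
"""

EXAFLOP = 1e18    # 1 exaflop/s = 10^18 floating-point operations per second
ZETTAFLOP = 1e21  # 1 zettaflop/s = 10^21 FLOP/s = 1,000 exaflop/s

BRAIN_MEDIAN_FLOPS = 1e18       # median brain estimate (10^18 FLOPS)
GLOBAL_GPU_TPU_FLOPS = 3.98e21  # best-guess global GPU + TPU capacity
WORLD_POPULATION = 8e9          # "approximately 8 billion human minds"


def brain_equivalents(total_flops: float, flops_per_brain: float = BRAIN_MEDIAN_FLOPS) -> float:
    """How many human brains' worth of compute `total_flops` represents."""
    return total_flops / flops_per_brain


if __name__ == "__main__":
    minds = brain_equivalents(GLOBAL_GPU_TPU_FLOPS)
    print(f"Global GPU/TPU capacity ~ {GLOBAL_GPU_TPU_FLOPS / ZETTAFLOP:.2f} zettaflops")
    print(f"Median-brain equivalents ~ {minds:,.0f}")
    print(f"Share of humanity's combined brainpower ~ {minds / WORLD_POPULATION:.1e}")
    # Re-run with the ends of the quoted brain range to see how much rides on the estimate.
    for label, per_brain in [("low brain estimate 3e13", 3e13), ("high brain estimate 1e25", 1e25)]:
        print(f"{label}: ~ {brain_equivalents(GLOBAL_GPU_TPU_FLOPS, per_brain):.3g} brains")
```

With the median brain figure, this reproduces the roughly 4,000 brain-equivalents in the text, about 5 × 10^-7 of humanity's combined brainpower. Swapping in the low (3 x 10^13) or high (10^25) brain estimate instead moves the answer from over a hundred million brains to well under one, which shows how much the conclusion depends on which brain estimate you pick.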

“Generative AI is eating the world.”

That’s how Andrew Feldman, CEO of Silicon Valley AI computer maker Cerebras, begins his introduction to his company’s latest achievement: An AI supercomputer capable of 2 billion billion operations per second (2 exaflops). The system, called Condor Galaxy 1, is on track to double in size within 12 weeks. In early 2024, it will be joined by two more systems of double that size. The Silicon Valley company plans to keep adding Condor Galaxy installations next year until it is running a network of nine supercomputers capable of 36 exaflops in total.
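The quoted Cerebras numbers can be tallied the same way. This minimal sketch assumes, as the 36-exaflop total implies, that all nine planned systems end up at the doubled size of 4 exaflops. It also compares the result with the best-guess global figure quoted earlier, treating the two FLOPS numbers as directly comparable, which is itself a simplifying assumption. The helper name is hypothetical.

```python
"""Tally of the planned Condor Galaxy network as quoted, in exaflops.
Assumes every one of the nine systems reaches the doubled size.
"""

CG1_INITIAL_EXAFLOPS = 2.0  # Condor Galaxy 1 at announcement
GROWTH = 2                  # "on track to double in size"
PLANNED_SYSTEMS = 9         # "a network of nine supercomputers"
GLOBAL_EXAFLOPS = 3_980.0   # 3.98 * 10^21 FLOP/s best guess, expressed in exaflops


def planned_network_exaflops(initial: float = CG1_INITIAL_EXAFLOPS,
                             growth: int = GROWTH,
                             systems: int = PLANNED_SYSTEMS) -> float:
    """Total capacity if every system ends up at the doubled size (assumption)."""
    per_system = initial * growth
    return per_system * systems


if __name__ == "__main__":
    total = planned_network_exaflops()
    print(f"Planned network: {total:.0f} exaflops")
    print(f"Share of estimated global GPU/TPU capacity: {total / GLOBAL_EXAFLOPS:.1%}")
```

Nine systems at 4 exaflops each gives the quoted 36 exaflops, a little under 1% of the estimated global GPU and TPU capacity.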

*I invite corrections on this matter. I hate exponential notation.

On the other hand, many regard the prediction “autonomous vehicles would dominate the roads in 2019” as a clear miss. I don’t see that. AVs probably could dominate our roads today, but for human and regulatory queasiness about them. The technology is certainly there.

The same goes for the digital book prediction, with a slight twist: paper books and documents are not entirely obsolete because a significant part of the reading population still prefers paper. But the technological prediction is not necessarily incorrect, since the technology needed for such dominance exists, and has for some time.

Ever since I discovered the combination of Moore’s Law and the Tech Singularity, it has shaped many of my own personal life strategies. I have assumed, for instance, that most problems considered intractable at any given time would eventually succumb to that combination, probably considerably sooner than most would expect. The unexpectedly speedy decoding of the human genome, which kick-started the biotech revolution, is an excellent example of that playing out.

In 2008 I wrote:

A prediction: a workable, effective, easily administered cure (yes, cure) for obesity will be available within five years.

As it turns out, I was a tad early - Ozempic, the type 2 diabetes treatment that was immediately repurposed off-label as a highly effective weight-loss drug, was released in 2018, five years after my prediction had expired. Nonetheless, it was released, and the technology has since been greatly improved (see my own experience with an even more effective obesity cure, Eli Lilly’s Mounjaro), and now the field is exploding.

As it happens, I have also been pursuing a bootstrap strategy for increasing my lifespan, initially more my hunch and intuition than anybody’s specific program. Since most of the big brains involved in singularity prediction seemed to be focused on the mid-21st century, give or take a few years, it seemed to me that if I could survive in reasonably good health until that time, I’d have a shot at a vastly extended lifespan. It also seemed obvious to me that lesser advances leading up to that time would make living to be a hundred much more likely.

This notion was later codified by (of course) Kurzweil in his book, “Fantastic Voyage: Live Long Enough to Live Forever,” where he laid out his own personal strategy for doing just that. (He’s two years younger than I am, by the way.) The book was published in 2004, and a review at Goodreads summarized it thusly:

Fantastic Voyage is a most hopeful book. According to the authors (Kurzweil and Grossman), if you can live for another 20 to 30 years, you might be able to live indefinitely. Getting past the next 20 to 30 years is the challenge. The book is basically advice on healthy ways of living in order to live to such a time when science and technology have advanced to the point where science has eliminated threats to your health.

The authors give advice on vitamins and supplement to take to increase your odds of surviving to 2030 or 2040. They write "we will have the means to stop and even reverse aging within the next two decades (this book was published in 2004). In the meantime, we can slow each aging process to a crawl using the methods outlined in this book."

Kurzweil believes science is developing exponentially. If so, then we might live to see a time when people live for many years, or maybe indefinitely. Today few people expect to live much beyond 100 years, if at that. How will people react when others fail to die so young? At some point people are going to realize that their death is not inevitable. Will people become more careful at that time? I think so. By this time cars will be driving themselves, eliminating traffic accidents. Disease will have been eliminated.

How has the writings of Kurzweil affected my life? I no longer accept death as an inevitable outcome. While I may die at some point in time, I also believe that mankind will overcome death. Death will become a thing of the past. I hope I live to see that day.

Again, perhaps a bit optimistic. 2024 is only a few months away, and I don’t yet see anything that looks like it can “stop and even reverse aging.” However, I have developed a healthy interest in the field of senolytics, and started taking quercetin to clear out senescent cells. Likewise with metformin. And I suspect that Mounjaro itself, as the “terminator” of body fat (which is one of the primary sources of inflammation, a principal cause of many of the “diseases of aging”), will be a similarly potent aid in my living a longer, healthier life. I have taken advantage of Dr. David Sinclair’s work with sirtuins, and take one of his NAD+ nutrient concoctions. And I continue to monitor life extension research, which is yet another field considered to be in the realm of science fiction only a few years ago, but is now also exploding.

I do trust in the power of exponential growth, so while I may be early, I may not yet be wrong. Kurzweil himself revised that particular prediction in 2005, by the way, and said that the combination of nanotechnology and the exponential acceleration of other biotechnical areas of research would result in the end of human disease by 2030. Since that is still seven years in the future, I’m keeping my fingers crossed.

I do envy those in their twenties, thirties, and even forties, because most of them will still be alive, healthy, and reasonably young when these major events, including the Tech Singularity itself, occur, assuming that they do. It is mostly us Boomers who are skating right on the edge of these things, and who need to take somewhat extraordinary measures to try to ensure that we will experience them. Ray Kurzweil, for instance. And me.

I hope you are one of the lucky ones.

Quite good coverage. I think I'm likely to live 10 more years, but 20 is a stretch. However, I just got my latest blood test back, and I'm in ridiculously good shape for my age. My glucose is just a tad high, and my kidney functions are a tad low, but everything else is still in the green, sometimes robustly so.

Considering the Singularity does tend to make planning even more complicated than previously.

We're personally now in a place where I can confidently tell my wife that if our near (less than 10 years) future turns bad it will be worse for well over 90% of the US population. In that case even a large percentage of the top 10% of the wealthy will find that wealth didn't protect them. And some of those at the bottom may make it because they know how to operate in such a case. And I have made provision for family down to my great-grandchildren. My extended family (siblings and their descendants) all seem to be acting in a similar fashion. Despite moving from rural poverty in the early 50s to the middle-class and sometimes above that, we have mostly maintained the habits we learned then, and so have, in most cases, our children. With only a couple of exceptions we're all prepared to "pull back" to small acreages and live off the land and off the grid (and protect ourselves) if need be.

Given human nature I rather expect to see some sort of shakeout as the "elites" finally stumble over Reality and try to adjust. I don't see that many of them making it, if it comes to that. And the Singularity will arrive willy-nilly (a term not used often enough now). I expect it to start slowly and gain speed as the frightened elites push their foot soldiers to rather a lot of violence. I think that those who survive relatively intact will then be ready to take actual advantage of whatever the Singularity brings. And it has been my experience that few among the "ruling elite" are smart enough to understand what will be happening. It will get interesting in the worst meaning of that term.

Hang in there, Bill. I hope you make it. I hope I do, too. And if worst comes to worst you're invited to join us where we stand. Bring your mind and anything else useful you have when TSHTF.

I'm 73 now, and although my father lived to 93, I don't expect to match his lifespan. And I'm not sure I want to. About 2 years ago, I finally began to think of myself as being "old". A long youthful life might be a great thing, but I'm of the opinion that a long "old-aged" life would be a curse.